According to the IBM Global AI Adoption Report, 82% of companies use or explore AI technologies. With this rapid growth, there is a need for regulatory standards to ensure responsible AI use. ISO/IEC 42001:2023, known as ISO 42001, is the first certifiable international standard governing AI management systems (AIMS). AIMS include policies and procedures for overseeing AI applications. The goal of ISO 42001 is to help organisations establish structured, ethical, and transparent AI systems that build customer trust and mitigate risks.

ISO 42001, developed jointly by the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC), is an international standard that sets out the requirements for establishing, implementing, maintaining, and continually improving an Artificial Intelligence Management System (AIMS). The standard aims to mitigate AI-related risks such as data inaccuracies, cybersecurity threats, and intellectual property issues. By integrating ISO 42001 into their compliance programs, organisations can enhance AI security, fairness, and transparency, and in turn build trust with customers and stakeholders.

Integrating ISO 42001 into your compliance management program offers significant advantages, particularly given the increasing focus on AI regulation. Although it is not legally mandated, adopting ISO 42001 helps position your organisation for emerging regulatory frameworks, such as the EU AI Act and the U.S. AI executive order. These frameworks emphasise AI governance, transparency, and risk management, principles that closely align with ISO 42001. Proactive implementation can provide a competitive edge by demonstrating responsibility, promoting sustainable AI governance, and building trust with stakeholders, all while preparing for future regulatory changes.

ISO 42001 provides a framework for creating ethical and transparent AI systems by requiring organisations to establish policies that govern the responsible development, use, and management of AI. These policies ensure that AI systems align with both internal goals and external regulations, such as data privacy and security requirements, and are regularly reviewed and updated to remain compliant with evolving standards.

By incorporating ISO 42001, companies can enhance the reliability and security of AI systems. This includes mitigating risks like data inaccuracy, algorithmic bias, and intellectual property concerns through better lifecycle management, from development to deployment. Controls such as regular impact assessments, bias detection mechanisms, and audit trails help ensure that AI systems are fair, transparent, and free of unintended negative consequences.
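As an illustration of what a bias detection mechanism might look like in practice, the sketch below compares approval rates between groups and flags a large gap for review. It is a minimal example under stated assumptions: the metric (a demographic parity gap), the function names, the sample decisions, and the 0.2 threshold are all hypothetical choices made for illustration and are not prescribed by ISO 42001.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive outcomes for each group.

    `records` is an iterable of (group, approved) pairs, where
    `approved` is True when the AI system produced a positive decision.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Return the difference between the highest and lowest selection rate."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical decisions made by an AI system, labelled by group.
decisions = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

# Illustrative threshold only; an organisation would set its own.
REVIEW_THRESHOLD = 0.2

gap = demographic_parity_gap(decisions)
needs_review = gap > REVIEW_THRESHOLD
print(f"Selection-rate gap: {gap:.2f}, needs review: {needs_review}")
```

A check like this could run as part of a regular impact assessment, with the result recorded in the audit trail alongside the data and model version it was computed on.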

Adhering to ISO 42001 fosters transparency and trust with customers, employees, and regulatory bodies. The standard emphasises clarity in AI decision-making processes, allowing stakeholders to understand and engage with how AI operates. This promotes long-term trust and ensures accountability in AI governance. Additionally, organisations adopting this standard are better positioned as industry leaders, particularly in light of emerging global AI regulations.

At the heart of ISO 42001 is the principle of trustworthy AI. The standard is designed around several key governance principles that ensure AI is deployed ethically and responsibly:

Transparency

AI-driven decisions must be transparent, so that users can understand how and why they are made, and free from bias and harmful societal or environmental impacts.

Accountability

Organisations must be accountable for AI decisions, providing clear reasoning and maintaining responsibility for their outcomes.

Fairness

AI systems should be scrutinised to prevent unfair treatment of individuals or groups, particularly in automated decision-making processes.

Explainability

Organisations are required to offer understandable explanations for factors influencing AI system outputs, ensuring that relevant parties can comprehend the reasoning behind AI decisions.

Data Privacy

Robust data management and security frameworks are essential to protect user privacy, ensuring data integrity and compliance with regulatory standards.

Reliability

AI systems must maintain high standards of safety and reliability across all domains to ensure consistent performance and user trust.

Structurally, ISO 42001 is modelled after the Plan-Do-Check-Act (PDCA) methodology, which is also used in other standards like ISO 27001. This structure ensures continuous monitoring, assessment, and refinement of AI systems, guiding organisations through a cyclical process of improvement. By understanding and applying the standard’s clauses and annexes, businesses can build comprehensive AI governance frameworks.

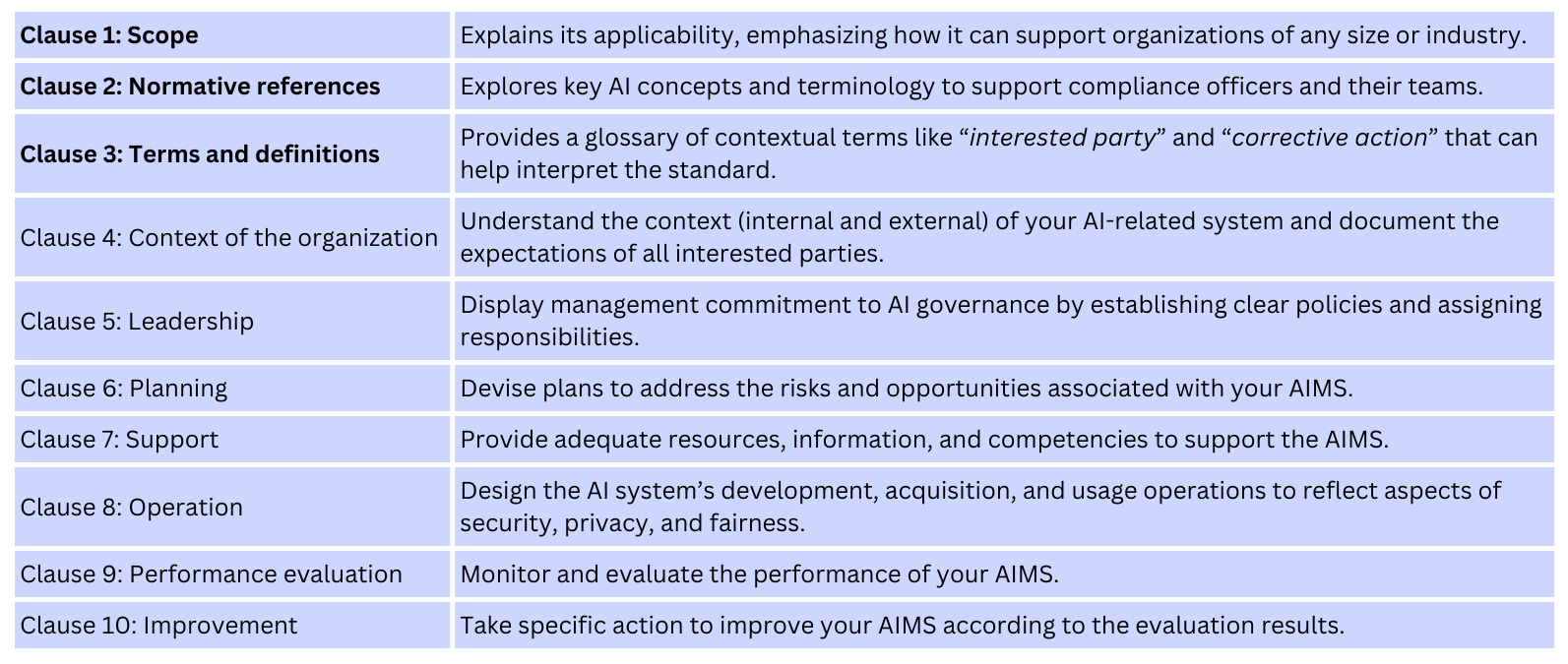

ISO 42001 includes ten clauses. The first three provide foundational information, while the remaining seven outline the mandatory requirements for establishing and operating the AI management system.
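The clause structure can be written down as a small lookup table, which is sometimes handy when building a gap-analysis checklist. The sketch below uses the clause titles of the harmonised structure that ISO management system standards share. The grouping of clauses 4 to 10 into PDCA phases is one commonly used mapping and is an assumption here, not something the text above specifies.

```python
# Clause numbers and titles following the harmonised structure used by
# ISO management system standards.
ISO_42001_CLAUSES = {
    1: "Scope",
    2: "Normative references",
    3: "Terms and definitions",
    4: "Context of the organization",
    5: "Leadership",
    6: "Planning",
    7: "Support",
    8: "Operation",
    9: "Performance evaluation",
    10: "Improvement",
}

# Clauses 1-3 are foundational; clauses 4-10 carry the requirements.
foundational = {n: t for n, t in ISO_42001_CLAUSES.items() if n <= 3}
requirements = {n: t for n, t in ISO_42001_CLAUSES.items() if n >= 4}

# Assumed PDCA grouping (illustrative; other groupings are also in use).
PDCA_MAPPING = {
    "Plan": [4, 5, 6],
    "Do": [7, 8],
    "Check": [9],
    "Act": [10],
}

for phase, clause_numbers in PDCA_MAPPING.items():
    titles = ", ".join(ISO_42001_CLAUSES[n] for n in clause_numbers)
    print(f"{phase}: {titles}")
```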

ISO 42001 includes four annexes (A–D) that provide detailed guidelines to support responsible AI governance. Annex A is central to the standard, offering a comprehensive set of controls aimed at the ethical and transparent development, deployment, and management of AI systems. These controls cover several key fields:

AI Policy Development and Governance

Managing AI Resources

Evaluating AI System Impacts

AI System Lifecycle Management

Data Quality and Governance for AI Systems

Information Sharing for AI Stakeholders

Responsible Use of AI Systems

Managing Third-Party and Customer AI Relations

The remaining annexes provide additional guidelines:

Annex B: Offers detailed instructions for implementing the controls outlined in Annex A.

Annex C: Focuses on identifying organisational AI objectives and the primary risk factors associated with AI implementation.

Annex D: Addresses the use of the AI management system across different domains and sectors, helping organisations apply relevant AI governance practices.

These annexes collectively ensure a structured, risk-aware, and industry-specific approach to AI governance.
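To make the Annex A control areas listed above easier to track, an organisation might keep a simple register that records whether each area has an implemented control with supporting evidence. The sketch below is one hypothetical way to do that. The `ControlStatus` class, the `coverage_gaps` function, and the sample entries are illustrative assumptions and do not come from the standard.

```python
from dataclasses import dataclass

# Control areas as named in this article's summary of Annex A.
ANNEX_A_AREAS = [
    "AI Policy Development and Governance",
    "Managing AI Resources",
    "Evaluating AI System Impacts",
    "AI System Lifecycle Management",
    "Data Quality and Governance for AI Systems",
    "Information Sharing for AI Stakeholders",
    "Responsible Use of AI Systems",
    "Managing Third-Party and Customer AI Relations",
]


@dataclass
class ControlStatus:
    """One register entry: a control area, its state, and its evidence."""
    area: str
    implemented: bool
    evidence: str = ""  # e.g. a document reference; empty means none recorded


def coverage_gaps(statuses):
    """Return the areas lacking an implemented control backed by evidence."""
    covered = {s.area for s in statuses if s.implemented and s.evidence}
    return [area for area in ANNEX_A_AREAS if area not in covered]


# Hypothetical register entries for illustration.
register = [
    ControlStatus("AI Policy Development and Governance", True, "AI-POL-001"),
    ControlStatus("Evaluating AI System Impacts", True, "Impact assessment Q1"),
    ControlStatus("Managing AI Resources", True),             # no evidence yet
    ControlStatus("Responsible Use of AI Systems", False, "Draft guideline"),
]

for area in coverage_gaps(register):
    print(f"Gap: {area}")
```

Requiring evidence as well as an "implemented" flag reflects the audit-oriented nature of certification: a control that cannot be demonstrated is treated as a gap.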

Implementing ISO 42001 involves several stages and stakeholders across an organisation. Here’s a streamlined process:

While the steps are clear, they can be complex and resource-intensive. Using a robust compliance management system can help streamline the process and overcome technical hurdles.

AI Consulting Group offers expert guidance in aligning with ISO 42001 certification, simplifying the compliance process through a structured approach. They specialise in balancing compliance rigour with practical implementation, ensuring that organisations can manage AI risks effectively while continuing to deliver the benefits of AI and meet standards of transparency, security, and ethics.

Their services include:

By using AI Consulting Group’s platform, you can efficiently manage your compliance processes, engage with experienced advisors, and centralise your AI governance efforts for maximum efficiency and transparency.

For more tailored support, you can book a demo with AI Consulting Group to explore how their solutions can streamline your organisation’s AI Governance journey.

As AI becomes integral to business operations, effective governance ensures responsible use, addressing privacy, security, and ethical concerns. Clear policies help Australian companies meet regulatory standards while building trust with consumers and stakeholders.

AI Consulting Group’s framework aligns with the Australian Government Voluntary AI Safety Standard (10 Guardrails), the ISO/IEC JTC 1/SC 42 Artificial Intelligence standards, the UNESCO Recommendation on the Ethics of Artificial Intelligence, and the US National Institute of Standards and Technology (NIST) AI Risk Management Framework.
